Puncturable Encryption – A Fine-Grained Approach to Forward-Secure Encryption and More: Part III

In Part I and Part II, we saw why forward security is an important property and discussed the state of the art of puncturable encryption. In this last part of our blog series, we focus on applications of puncturable encryption. We then dive into the details of a concrete implementation, which helps to explore the properties of puncturable-encryption schemes.

Applications of Puncturable Encryption

Puncturable encryption (PE) schemes have a number of applications that range from Transport Layer Security (TLS) to encrypted backups. First, we take a look at zero round trip time (0-RTT) key exchange protocols. Second, we explore Cloudflare's Geo Key Manager and how PE could enable both fine-grained access control and forward security. Third, we review the use of PE in SafetyPin, a system for encrypted mobile-device backups.

0-RTT Forward-Secure Key Exchange for TLS

Transport Layer Security (TLS), with TLS 1.3 representing its most recent version, is one of the most widespread protocols for secure communication over untrusted networks. One of the core goals of TLS is to agree on shared keys with Ephemeral Diffie-Hellman (EDH) in order to establish a forward-secure channel.

In TLS, keys are established during the so-called handshake, which is the initial phase of the protocol (cf. Figure 1). First, a client sends a ClientHello message announcing its supported TLS versions, algorithms, etc. This message already includes the client’s EDH key share. When the server receives the client's message, it replies with a ServerHello message containing its own key share. At this point, the server can already compute the shared secret from the EDH key exchange. The client, however, must wait until it has received the server’s answer. Once both parties have completed the EDH key exchange, the remainder of the handshake is encrypted. TLS thereby achieves a forward-secure key exchange but requires at least two messages.

           Client                                           Server

    Key  ^ ClientHello
    Exch | + key_share*
         | + signature_algorithms*
         | + psk_key_exchange_modes*
         v + pre_shared_key*       -------->
                                                      ServerHello  ^ Key
                                                     + key_share*  | Exch
                                                + pre_shared_key*  v
                                            {EncryptedExtensions}  ^  Server
                                            {CertificateRequest*}  v  Params
                                                   {Certificate*}  ^
                                             {CertificateVerify*}  | Auth
                                                       {Finished}  v
                                   <--------  [Application Data*]
         ^ {Certificate*}
    Auth | {CertificateVerify*}
         v {Finished}              -------->
           [Application Data]      <------->  [Application Data]

TLS 1.3 added a new feature that allows clients and servers to perform a reduced handshake whenever they have already performed one full handshake before. Then, the client is already allowed to send encrypted data in its first message (cf. Figure 2). This is achieved by storing a shared secret at the end of the handshake on both clients and servers. If a client then decides to use this shared secret on the next connection as pre-shared secret key (PSK), the stored PSK is used to derive the secret keys required for encryption. Note though, that if this key leaks, confidentiality is no longer guaranteed for the data sent as part of the early data.

    ClientHello
    + early_data
    + key_share*
    + psk_key_exchange_modes
    + pre_shared_key
    (Application Data*)     -------->
                                                    ServerHello
                                               + pre_shared_key
                                                   + key_share*
                                          {EncryptedExtensions}
                                                  + early_data*
                                                     {Finished}
                            <--------       [Application Data*]
    (EndOfEarlyData)
    {Finished}              -------->
    [Application Data]      <------->        [Application Data]

As discussed above, EDH requires one round trip: only after the two key shares have been exchanged can the shared key be derived and a secure channel be established. Recent work has explored alternatives to the PSK-based approach for reducing the number of round trips. In particular, PE or, more specifically, puncturable key encapsulation mechanisms (PKEMs) have been investigated as a way to reduce the handshake to zero round trips (cf. [GHJL17, DKLRSS18]). In such a protocol, the client essentially encrypts a session key under the public key of the server and sends the ciphertext to the server. The client can therefore immediately start sending application data encrypted with the session key. The server decrypts the ciphertext to obtain the same session key. To achieve forward security, the server then punctures its secret key on the ciphertext. Consequently, the secret key can no longer decrypt ciphertexts from past sessions. Puncturing also provides replay protection, since a replayed ciphertext can no longer be decrypted. Beyond TLS, these techniques can also be applied to QUIC [DDGHJKNRW20].

This approach requires the client to know the server's public key beforehand. This is not an issue after the first connection. Moreover, with the trend to store public keys in DNS entries to support new features such as Encrypted Client Hello, this requirement does not hinder deployment of 0-RTT forward-secure key exchange.

Forward-secure transport of early data can also be achieved by employing puncturable pseudorandom functions (PPRFs) [AGJ19]. The idea of this approach is that client and server store a PPRF secret key during the first full handshake. When performing a session-resumption handshake with early data, this stored state is then used to derive forward-secure keys. In contrast to the PKEM-based approach, both client and server need to puncture their PPRF keys.
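To make the PPRF idea more concrete, the following is a minimal sketch of a tree-based (GGM-style) puncturable PRF. It is not the construction from [AGJ19]: the class name PuncturablePRF, the toy input length of 8 bits, and the use of SHA-256 as the length-doubling generator are all assumptions made for illustration.

```python
import hashlib
from typing import Dict, Optional

DEPTH = 8  # toy domain of 2**8 inputs (assumption for illustration)


def _child(seed: bytes, bit: str) -> bytes:
    # Length-doubling generator modelled with SHA-256 and a domain-separating bit
    return hashlib.sha256(seed + bit.encode()).digest()


class PuncturablePRF:
    """Toy GGM-tree PRF; the key is a set of subtree seeds indexed by bit prefix."""

    def __init__(self, root_seed: bytes) -> None:
        self.nodes: Dict[str, bytes] = {"": root_seed}

    def _covering_prefix(self, path: str) -> Optional[str]:
        # Find the stored subtree seed (if any) from which `path` is derivable
        for length in range(len(path) + 1):
            if path[:length] in self.nodes:
                return path[:length]
        return None

    def evaluate(self, x: int) -> Optional[bytes]:
        path = format(x, f"0{DEPTH}b")
        prefix = self._covering_prefix(path)
        if prefix is None:
            return None  # x has been punctured
        seed = self.nodes[prefix]
        for bit in path[len(prefix):]:
            seed = _child(seed, bit)
        return seed

    def puncture(self, x: int) -> None:
        path = format(x, f"0{DEPTH}b")
        prefix = self._covering_prefix(path)
        if prefix is None:
            return  # already punctured
        seed = self.nodes.pop(prefix)
        # Walk down to the leaf of x and keep only the sibling seeds on the way
        for bit in path[len(prefix):]:
            sibling = "1" if bit == "0" else "0"
            self.nodes[prefix + sibling] = _child(seed, sibling)
            seed = _child(seed, bit)
            prefix += bit
        # The leaf seed for x is intentionally not stored


# Both sides start from the same seed and derive the same early-data key
client = PuncturablePRF(b"\x01" * 32)
server = PuncturablePRF(b"\x01" * 32)
early_data_key = client.evaluate(5)
client.puncture(5)
server.puncture(5)
```

After puncturing, the key no longer contains any seed from which the output at the punctured input can be derived, while all other outputs remain unchanged. The key grows by at most DEPTH - 1 seeds per puncture.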

Forward Security for the Geo Key Manager

As the use of TLS for securing communication on the Internet grows, content distribution networks (CDNs) such as Cloudflare face new key-management issues: to guarantee low latency, all of their endpoints terminating TLS connections, deployed in colocations all over the world, need access to the secret keys associated with the certificate (i.e., public key). As those secret keys might belong to the customers, customers either need to provide the keys to the CDN or deploy solutions such as Keyless SSL, where they are required to run their own key server answering signing requests from the CDN. The latter comes at the cost of higher latency if endpoints or users are not close to the location of the key server. The former faces a different issue: due to differences in local laws or other regulations surrounding the use of secret keys, customers might not want their keys exposed to certain locations and areas.

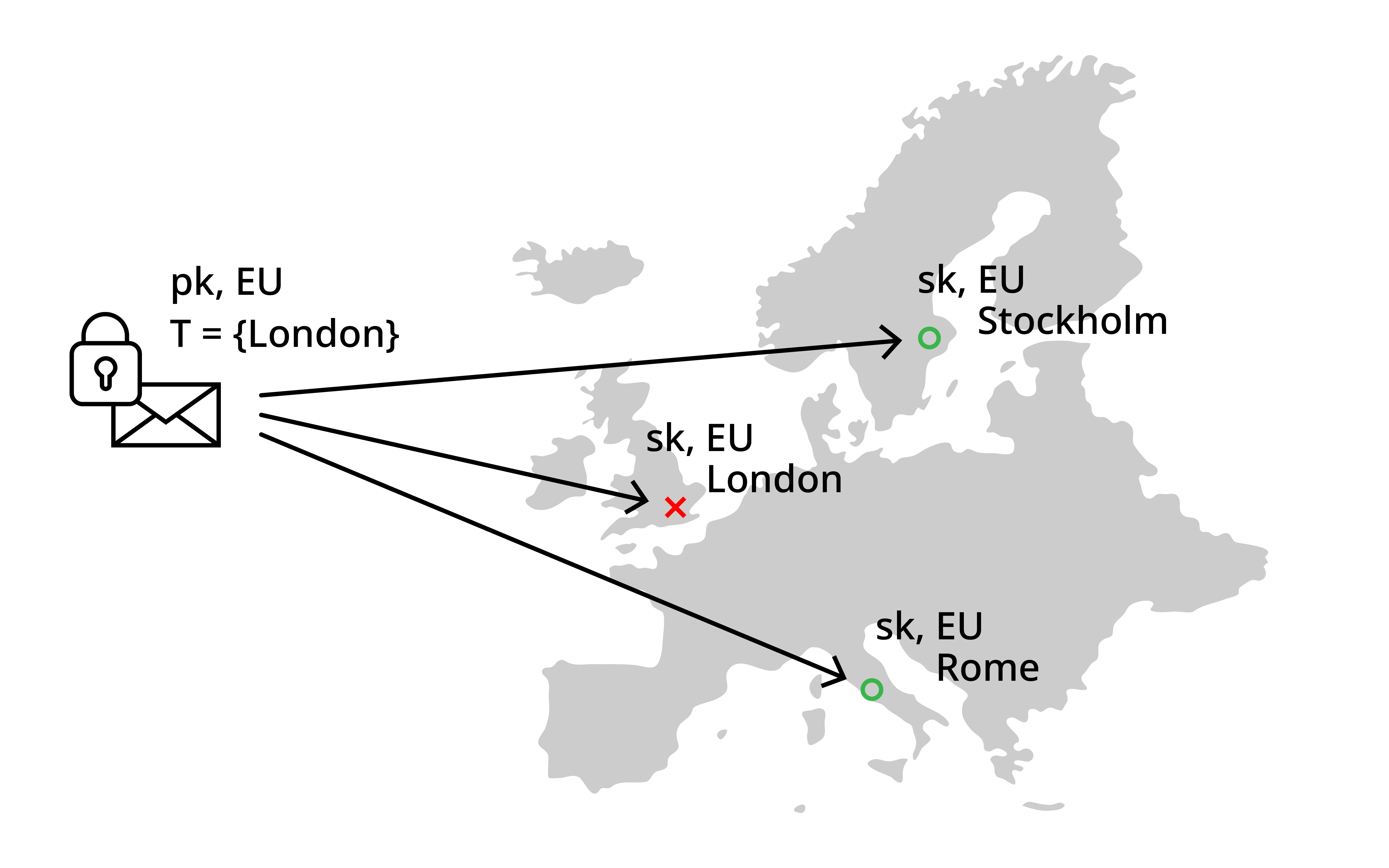

Cloudflare's Geo Key Manager tackles this issue by giving customers control over the locations where their secret keys are stored when shared with Cloudflare (cf. Figure 3). Effectively, customers are able to put whole regions on allow-lists, e.g., Europe or the US. At the same time, they are able to put individual colocations within those regions, e.g., London in Europe, on deny-lists. Finally, they are also able to directly put colocations on the allow-list that are not inside the regions already on allow-lists, e.g., Singapore. The currently used system combines identity-based broadcast encryption (IBBE) with identity-based revocation (IBR), but does not offer forward-security guarantees. Hence, if the keys of any colocation leak, the customer's secret keys are in danger of being leaked as well.

One can obtain the same functionality from PE schemes that support both negative and positive puncturing (cf. [DRSS21]). The idea is to manage region-based access using positive tags and to deny individual colocations by puncturing on unique negative tags assigned to each colocation. To this end, one derives keys for each region by using the name of the region as positive tag. Each colocation is assigned a unique negative tag and hence receives the secret key positively punctured on its region and negatively punctured on its colocation-specific tag. If customers now want to store their secret key, they encrypt the key for each allowed region using the region as positive tag and the denied colocations of the corresponding region as negative tags. If a colocation needs to access the key, it can only decrypt if one of the ciphertexts was encrypted for its region and that particular ciphertext was not tagged with the negative tag of the colocation. A colocation is unable to decrypt ciphertexts for other regions, since it does not have access to the keys positively tagged with those regions.

If the PE scheme supports puncturing with respect to multiple negative tags, one can additionally obtain forward security. The tag space can be partitioned into one part containing the colocation (i.e., negative) tags and another part identifying time periods, viewed as an ordered sequence. Customers can then specify a time epoch as an additional negative tag, say 2021-04 for ciphertexts decryptable in April 2021. Once the month has passed, all colocations puncture their secret keys on the month's tag and are then no longer able to decrypt those ciphertexts.
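The access logic described above can be modelled without any cryptography. The following sketch only tracks which tags a ciphertext and a colocation key carry and decides whether decryption would succeed; the class names PolicyCiphertext and ColocationKey as well as the colocations Vienna and New York are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Set


@dataclass(frozen=True)
class PolicyCiphertext:
    """Tags attached to one ciphertext (no actual encryption is modelled)."""
    region: str             # positive tag
    denied: FrozenSet[str]  # negative tags of the denied colocations
    epoch: str              # negative tag identifying the time period


@dataclass
class ColocationKey:
    """Tags that the secret key of one colocation is bound to."""
    region: str    # key is positively punctured on this region
    colo_tag: str  # key is negatively punctured on this tag
    punctured_epochs: Set[str] = field(default_factory=set)

    def puncture_epoch(self, epoch: str) -> None:
        # Models puncturing the key on the tag of an expired time period
        self.punctured_epochs.add(epoch)

    def can_decrypt(self, ct: PolicyCiphertext) -> bool:
        return (
            ct.region == self.region
            and self.colo_tag not in ct.denied
            and ct.epoch not in self.punctured_epochs
        )


# Customer policy: allow Europe, deny London, valid for April 2021
ct = PolicyCiphertext("Europe", frozenset({"London"}), "2021-04")
vienna = ColocationKey("Europe", "Vienna")
london = ColocationKey("Europe", "London")
new_york = ColocationKey("US", "New York")
```

In this model, only the Vienna key can decrypt the ciphertext, and it loses that ability once it is punctured on 2021-04. In an actual PE scheme, these checks are enforced by the key material itself rather than by a Boolean predicate.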

Bloom-Filter Encryption in SafetyPin

SafetyPin [DCM20] is a system for encrypted backups of mobile devices which uses only short PINs at the user's side and several hardware security modules (HSMs) protecting user data at the server's side. No single HSM is capable of retrieving all the user data. Essentially, the motivation is that if one backs up mobile-device data to the Cloud, one does not want the Cloud provider to gain access to it. The current state of the art (as used by Google, for example) is to use an HSM which has public and secret keys (\(pk_{HSM},sk_{HSM}\)), with \(sk_{HSM}\) baked into the HSM so that even the Cloud provider cannot access it. For a backup, one encrypts a PIN and a symmetric key \(k_{SYM}\) under the public key \(pk_{HSM}\) of the HSM as \(C_{B}=Enc(pk_{HSM},PIN|k_{SYM})\) and also sends the backup data encrypted under \(k_{SYM}\) as \(E(k_{SYM},data)\) to the Cloud provider.

When one has lost the mobile device and wants to recover the backup to a new device, one sends the PIN encrypted under the HSM public key \(pk_{HSM}\) to the Cloud provider as \(C_{R}=Enc(pk_{HSM},PIN)\). The Cloud provider sends both \(C_{B}\) and \(C_{R}\) to the HSM, which decrypts them and compares the PINs. If the PINs match and only a limited number of attempts have been made, then \(k_{SYM}\) is returned to the user, who can then decrypt the backup data. In any case, the HSM can be seen as a single point of failure (and attacks on HSMs are realistic and do happen).

SafetyPin has two main goals: first, to retain the scalability offered by today's (PIN-based) mobile backup systems and, second, to protect against HSM compromises. This can be achieved by applying threshold cryptography, in particular secret sharing, such that some pre-defined number of HSMs out of all the HSMs is needed to recover the backup. Below that threshold, no data is leaked. However, during recovery, the adversary can observe which HSMs were used and specifically target that subset of HSMs.
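The threshold idea can be illustrated with textbook Shamir secret sharing. The sketch below is not SafetyPin's actual protocol, which is considerably more involved; the function names split and recover, the field modulus, and the parameters in the usage example are assumptions made for illustration.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # Mersenne prime; toy secrets must be smaller than this


def split(secret: int, threshold: int, shares: int) -> List[Tuple[int, int]]:
    """Split `secret` so that any `threshold` of the `shares` points recover it."""
    # Random polynomial of degree threshold - 1 with the secret as constant term
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        acc = 0
        for coeff in reversed(coeffs):  # Horner's rule
            acc = (acc * x + coeff) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, shares + 1)]


def recover(points: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# One share per HSM: any 3 out of 5 HSMs can jointly recover the secret
hsm_shares = split(123456789, threshold=3, shares=5)
recovered = recover([hsm_shares[0], hsm_shares[2], hsm_shares[4]])
```

Fewer than `threshold` shares reveal nothing about the secret, which is why an adversary has to compromise a whole subset of HSMs rather than a single one.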

To mitigate attacks that target the HSMs observed during recovery, the authors make use of puncturable encryption, in particular Bloom-Filter Encryption (BFE), to protect against future compromises. As we have seen, secret keys are large in BFE and usually will not fit into a small HSM. The authors cleverly outsource the secret key to the Cloud in a tree-like structure such that only logarithmically many read-and-write accesses are necessary to update the key, which yields very efficient puncturing. Moreover, the HSM itself only has to hold a very short key, while forward-security guarantees are still achieved.

We recommend watching the excellent talk on SafetyPin given by Henry Corrigan-Gibbs at the ViSP Distinguished Lecture Series [C21].

Implementation of Bloom-Filter Encryption

Finally, let us look at an implementation of a concrete PE scheme. In particular, we will demonstrate the use of Bloom-Filter Encryption (BFE) [DJSS18,DGJSS21] for forward-secure 0-RTT key exchange protocols. The example, which is based on the BFE implementation available as part of pyrelic, demonstrates how BFE can be used to establish a shared secret between a client and a server. We note that, strictly speaking, we realize a Bloom-filter key encapsulation mechanism (BFKEM), but we will call it BFE for the sake of simplicity.

First, we have to select parameters for the Bloom filter. Let us assume that we have a server that has to handle one new connection per second. If we want the server's public key to last for three months, a possible choice for the size of the Bloom filter is \(n = 524288 = 2^{19}\) with a false-positive probability of \(p \approx 2^{-10}\) (corresponding to the decryption error in BFE). Now, we can use the keygen function to generate a new key-pair for this choice of parameters:

    import bfe

    # Generate a key-pair
    sk, pk = bfe.keygen(
        32,  # size of shared secret key
        2 ** 19,  # Bloom-filter size
        0.0009765625,  # false-positive probability (2 ** -10)
    )
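A quick calculation with the textbook Bloom-filter formulas shows what this choice of parameters means for the key size. The sketch assumes that the Bloom-filter size passed to keygen counts the expected number of inserted elements (i.e., punctures) and that the bit array is sized with the standard formulas; the helper name bloom_parameters is hypothetical, and the BloomFilter class in pyrelic may round differently.

```python
import math
from typing import Tuple


def bloom_parameters(n: int, p: float) -> Tuple[int, int]:
    """Return (number of bits m, number of hash functions k) for n elements."""
    # Standard formulas: m = -n ln(p) / (ln 2)^2 and k = (m / n) ln 2
    m = math.ceil(-n * math.log(p) / math.log(2) ** 2)
    k = round(m / n * math.log(2))
    return m, k


# Parameters from the example above
m, k = bloom_parameters(2 ** 19, 2 ** -10)
# m is roughly 7.6 million bits and k is 10 hash functions
```

Under these assumptions, the secret key initially holds one derived IBE key per bit, i.e., several million group elements, and every ciphertext carries one component per hash function.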

Key generation is the most expensive part of this scheme and will take several minutes to finish. Once it has completed, the public key pk can be shared with the clients. If a client now wants to establish a new connection with the server, it needs to encapsulate a new secret key:

    # Encapsulate a new key
    k, ctxt = bfe.encaps(pk)

The freshly sampled secret key k can now be used to derive keys for an authenticated encryption scheme such as AES-GCM:

    import os

    from cryptography.hazmat.primitives.ciphers.aead import AESGCM

    # Encrypt a message using AES-GCM
    data = b"a secret message"
    aad = b"authenticated but unencrypted data"
    nonce = os.urandom(12)
    ct = AESGCM(k).encrypt(nonce, data, aad)
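The example above uses k directly as the AES-GCM key. If more than one key is needed, for instance one per direction, the keys can be derived from k with a key-derivation function. The following is a minimal sketch of HKDF with SHA-256 (RFC 5869) built from the standard library only; the function name hkdf_sha256, the info labels, and the placeholder value of k are assumptions made for illustration.

```python
import hashlib
import hmac


def hkdf_sha256(ikm: bytes, info: bytes, length: int = 32, salt: bytes = b"") -> bytes:
    """HKDF (RFC 5869) instantiated with HMAC-SHA-256."""
    # Extract: condense the input key material into a pseudorandom key
    prk = hmac.new(salt or b"\x00" * 32, ikm, hashlib.sha256).digest()
    # Expand: stretch the pseudorandom key into `length` bytes bound to `info`
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


# Placeholder standing in for the encapsulated secret k from bfe.encaps
k = bytes(32)
client_to_server_key = hkdf_sha256(k, b"client to server")
server_to_client_key = hkdf_sha256(k, b"server to client")
```

In practice, one would rather rely on a vetted implementation, such as the HKDF class shipped with the cryptography package that also provides AESGCM.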

The BFE ciphertext ctxt together with the encrypted message ct and the nonce can now be sent to the server. Note that the client does not need to wait for a reply of the server before encrypting its first message.

The server at some point receives the data from the client and decapsulates the BFE ciphertext to obtain the shared secret:

    # Decapsulate the key
    received_k = bfe.decaps(sk, ctxt)

The most important step is still missing, however: the secret key needs to be punctured on the received ciphertext. Forward security is ensured only after sk has been punctured:

    # Puncture the secret key on ciphertext
    bfe.puncture(sk, ctxt)

Decapsulation of the same ciphertext fails after this step. Furthermore, after puncturing, all copies of the key have to be updated. If an old version of the key is kept in a backup or somewhere else in memory, an attacker could potentially obtain an old unpunctured key, which voids the security guarantees.

Finally, the received shared secret can now be used to decrypt the messages sent by the client:

    # Decrypt the message
    received_data = AESGCM(received_k).decrypt(nonce, ct, aad)

Now that we know how to use the scheme, let us take a deeper look at its implementation. For the functions that we do not cover in full detail, please take a look at the example available in pyrelic. We require three ingredients to implement BFE:

- Boneh-Franklin IBE [BF01]: The choice of the identity-based encryption (IBE) scheme is central to the instantiation of the BFE scheme.

- Bloom filter: The Bloom filter is required to manage the secret key. We associate an identity of the IBE to each bit index in the Bloom filter.

- Fujisaki-Okamoto transform [FO99]: We use the FO-transform to achieve CCA security.

As the scheme uses bilinear groups, we note that the description in [DJSS18] uses multiplicative notation for the group operations. Hence, we present the implementation using the multiplicative interface from pyrelic.

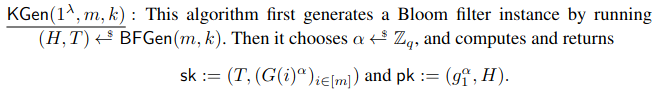

Let us start with the key generation. Recall that a Bloom filter defines a set of hash functions that map elements to bit indices. Whenever an element is inserted into the Bloom filter, all the bits returned by the hash functions are set. Checking whether an element is contained in the set consists of checking whether all bits returned by the hash functions are set. In the BFE scheme, we associate an identity of the IBE with each bit in the Bloom filter. Hence, during key generation, the BFE keys are produced by outputting the IBE's public key as public key and the derived keys for each bit of the Bloom filter as secret key, while the main secret key of the IBE is discarded (cf. Figure 4).

Consequently, we require an implementation of the hash function \(G\), which is provided by map_identity, and of \(\mathsf{KGen}\) itself:

    import struct
    from typing import Tuple

    from pyrelic import G2, generator_G1, hash_to_G2, rand_BN_order


    def map_identity(identity: int) -> G2:
        # hash the bit index (the identity) to an element of G2
        return hash_to_G2(struct.pack("<Q", identity))


    def keygen(
        key_size: int,
        filter_size: int,
        false_positive_probability: float,
    ) -> Tuple[PrivateKey, PublicKey]:
        exponent = rand_BN_order()  # BF secret key
        pk = generator_G1(exponent)  # BF public key
        bloom_filter = BloomFilter(
            filter_size,
            false_positive_probability,
        )
        return (
            PrivateKey(
                bloom_filter,
                # extract derived keys for all identities
                [
                    map_identity(identity) ** exponent
                    for identity in range(bloom_filter.bitset_size)
                ],
                key_size,
                pk,
            ),
            PublicKey(bloom_filter, key_size, pk),
        )

The BloomFilter class computes the optimal number of hash functions based on the size of the Bloom filter \(n\) and the false-positive probability \(p\). It also provides the mapping of elements to their bit indices. When a bit is set in the Bloom filter, we remove the corresponding key from the array stored in the PrivateKey instance. Conversely, checking whether a key is available for an index amounts to checking whether the corresponding array entry contains a key or is unset.
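For readers less familiar with Bloom filters, the following minimal sketch shows the mapping from elements to bit indices together with insertion and membership checks. It is not the BloomFilter class from the pyrelic example; the class name ToyBloomFilter and the way the hash functions are derived from SHA-256 are assumptions made for illustration.

```python
import hashlib
from typing import List


class ToyBloomFilter:
    def __init__(self, bitset_size: int, hash_count: int) -> None:
        self.bitset_size = bitset_size
        self.hash_count = hash_count
        self.bits = [False] * bitset_size

    def bit_positions(self, element: bytes) -> List[int]:
        # The i-th hash function is SHA-256 prefixed with the index i
        return [
            int.from_bytes(
                hashlib.sha256(i.to_bytes(4, "little") + element).digest(),
                "little",
            )
            % self.bitset_size
            for i in range(self.hash_count)
        ]

    def insert(self, element: bytes) -> None:
        for position in self.bit_positions(element):
            self.bits[position] = True

    def __contains__(self, element: bytes) -> bool:
        return all(self.bits[position] for position in self.bit_positions(element))


bloom_filter = ToyBloomFilter(bitset_size=1024, hash_count=5)
bloom_filter.insert(b"first ciphertext")
```

In BFE, a set bit corresponds to a deleted derived key, so an element being reported as contained means that none of the keys needed to decrypt the corresponding ciphertext is left. A false positive of the Bloom filter therefore shows up as a decryption error.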

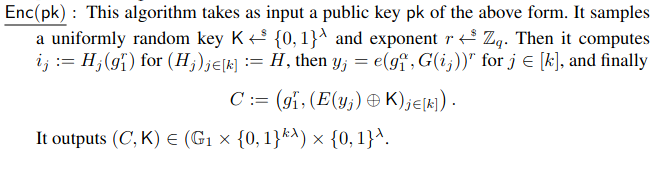

Before we come to encapsulation and decapsulation, we need a method to select the identities. Note that a BFE ciphertext consists of \((g_1^r, E(e(pk, G(id))^r) \oplus K)\) for a freshly sampled \(r\), where \(E: G_T \to \{ 0, 1 \}^\ell\) is a hash function. The idea is now to use the first component, i.e., \(u = g_1^r\), as the element from which the identities are derived or, in other words, as the element that is collected in the Bloom filter. By hashing \(g_1^r\) with the hash functions associated with the Bloom filter, we obtain all the identities.

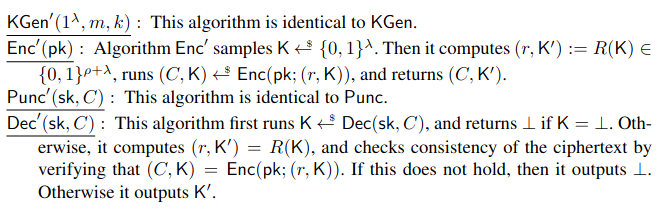

Now let's look at encapsulation and decapsulation. Figure 5 depicts both algorithms as well as the FO transform applied to BFE. The idea here is to first compute \(u\) and then use \(u\) to derive the identities. By reusing the same randomness \(r\) for all derived identities, we then produce all \(E(e(pk, G(id))^r) \oplus K\).
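That a single derived key suffices for decapsulation follows from the bilinearity of the pairing. As a short derivation, write \(x\) for the discarded main secret key of the IBE (a symbol introduced here for illustration), so that \(pk = g_1^x\) and the derived key for an identity is \(sk_{id} = G(id)^x\). Then

\[
e(u, sk_{id}) = e(g_1^r, G(id)^x) = e(g_1, G(id))^{rx} = e(g_1^x, G(id))^r = e(pk, G(id))^r .
\]

The holder of \(sk_{id}\) therefore recomputes exactly the value that was hashed with \(E\) during encapsulation and can remove the mask from the corresponding ciphertext component.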

\(R\) used in the FO transform is implemented as hash_r, and the function hash_and_xor implements \(E(y) \oplus K\). Implementation-wise, there is an opportunity to optimize some of the operations. The scheme computes the input to \(E\) as \(e(pk, G(id))^r\). Instead of performing the exponentiation with \(r\) in the target group for every identity, the computation can be rewritten as \(e(pk^r, G(id))\), which replaces multiple exponentiations in the target group with a single exponentiation in the source group. Let's take a look at the code:

    def encaps(pk: PublicKey) -> Tuple[bytes, Ciphertext]:
        # sample a random value for FO
        key = os.urandom(pk.key_size)
        # derive r and k
        r, k = hash_r(key, pk.key_size)
        u = generator_G1(r)
        # instead of applying r to each pairing, precompute pk ** r
        pkr = pk.pk ** r

        return k, Ciphertext(
            u,
            tuple(
                hash_and_xor(pair(pkr, map_identity(identity)), key)
                for identity in get_bit_positions(
                    bytes(u),
                    pk.hash_count,
                    pk.filter_size,
                )
            ),
        )

For decapsulation, we start off by deriving all the identities from the received \(u\). We then check whether the secret key still contains the derived key associated with any of these identities. If there is one, this key is used to decrypt the corresponding ciphertext component:

def decaps(sk: PrivateKey, ctxt: Ciphertext) -> Optional[bytes]:

# obtain key from one of the ciphertexts

key: Optional[bytes] = None

bit_positions = sk.bloom_filter.get_bit_positions(

bytes(ctxt.u)

)

for v, identity in zip(ctxt.v, bit_positions):

# check if key is available for the identity

if identity in sk:

key = hash_and_xor(pair(ctxt.u, sk[identity]), v)

break

else:

return None

# if we were able to decrypt a key, recompute r, k and the

# ciphertext as in encaps and check that it matches

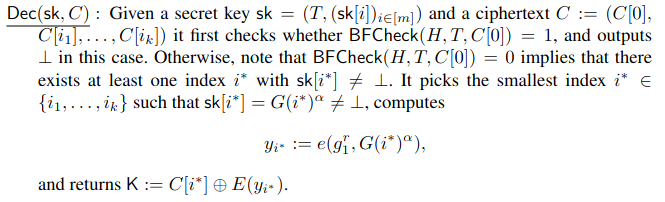

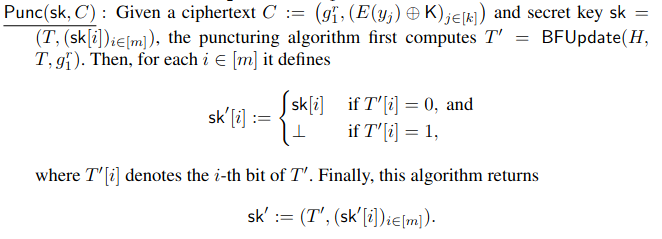

...The last function that we have to implement is the puncturing itself. In the BFE scheme, this means that if we want punctures the secret key on a ciphertext, \(u\) is inserted into the Bloom filter and all derived keys associated to the identities are deleted (see Figure 6).

For our implementation, this means that we just have to delete the corresponding entry in the array kept in the secret key:

    class PrivateKey:
        def __delitem__(self, identity: int) -> None:
            key = self.secret_keys[identity]
            if key is not None:
                # remove key from the array
                self.secret_keys[identity] = None
                key.set_neutral()  # clear key
                del key

        ...


    def puncture(sk: PrivateKey, ctxt: Ciphertext) -> None:
        for identity in sk.bloom_filter.get_bit_positions(
            bytes(ctxt.u)
        ):
            del sk[identity]  # remove the associated secret key

Note, however, that if there are copies of the secret key, all copies have to be updated in the same way. Otherwise, the security guarantees obtained from puncturing a single copy are voided. The same care has to be taken if the secret key is stored in a file, for example. During the puncturing process, this copy needs to be updated as well.

This concludes our blog series on puncturable encryption. If you have any comments, suggestions or questions, please feel free to contact us (@sebastinas_, @drl3c7er, @CStriecks).

References

- [AGJ19]: Nimrod Aviram, Kai Gellert, Tibor Jager: Session Resumption Protocols and Efficient Forward Security for TLS 1.3 0-RTT. EUROCRYPT 2019.

- [BF01]: Dan Boneh, Matthew K. Franklin: Identity-Based Encryption from the Weil Pairing. CRYPTO 2001.

- [C21]: Henry Corrigan-Gibbs: SafetyPin: Encrypted Backups with Human-Memorable Secrets. YouTube 2021.

- [DCM20]: Emma Dauterman, Henry Corrigan-Gibbs, David Mazières: SafetyPin: Encrypted Backups with Human-Memorable Secrets. OSDI 2020.

- [DDGHJKNRW20]: Fynn Dallmeier, Jan P. Drees, Kai Gellert, Tobias Handirk, Tibor Jager, Jonas Klauke, Simon Nachtigall, Timo Renzelmann, Rudi Wolf: Forward-Secure 0-RTT Goes Live: Implementation and Performance Analysis in QUIC. CANS 2020.

- [DGJSS21]: David Derler, Kai Gellert, Tibor Jager, Daniel Slamanig, Christoph Striecks: Bloom Filter Encryption and Applications to Efficient Forward-Secret 0-RTT Key Exchange. Journal of Cryptology 2021.

- [DJSS18]: David Derler, Tibor Jager, Daniel Slamanig, Christoph Striecks: Bloom Filter Encryption and Applications to Efficient Forward-Secret 0-RTT Key Exchange. EUROCRYPT 2018.

- [DRSS21]: David Derler, Sebastian Ramacher, Daniel Slamanig, Christoph Striecks: Fine-Grained Forward Secrecy: Allow-List/Deny-List Encryption and Applications. Financial Cryptography 2021.

- [FO99]: Eiichiro Fujisaki, Tatsuaki Okamoto: Secure Integration of Asymmetric and Symmetric Encryption Schemes. CRYPTO 1999.

- [GHJL17]: Felix Günther, Britta Hale, Tibor Jager, Sebastian Lauer: 0-RTT Key Exchange with Full Forward Secrecy. EUROCRYPT 2017.